It seems that every day, new ETFs are being introduced in all different flavors. Growing more popular are leveraged and inverse ETFs, which are increasing in number at a rapid rate. Last week, I overheard two people on the train talking about the benefits of leveraged ETFs and how one can earn two or three times the market performance using these instruments. They concluded that investing in leveraged ETFs rather than their unlevered equivalents was a no brainer.

I quickly thought back to an article by Rodney Sullivan from the May/June issue of the CFA Institute Magazine that I had skimmed over the month before and decided that the merits of investing in leveraged ETFs would be a good topic for this forum.

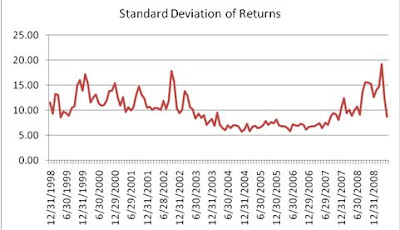

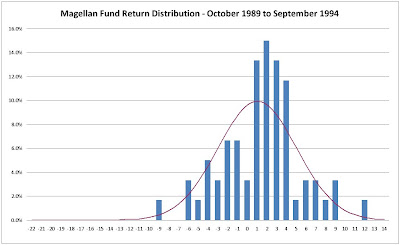

As we all know, volatility hurts our realized returns in the market. The higher the volatility of your daily or monthly returns, the more the geometric average return will deviate from the simple average return. For example, portfolio A, B, and C below all have identical average monthly returns (1%) over the 4 month period. You will earn the most money (4.06%) with A because it has the lowest volatility and the least money with portfolio C since it has the highest volatility. This is crucial to understanding the unforeseen impact volatility can have on the performance of leveraged ETFs.

Leveraged ETFs seek to return a multiple of the market return such as an Ultra 2X or 3X S&P 500 ETF. Over short time periods such as one day, the leveraged ETF will come very close to its goal. However, it only achieves the objective over that very short period. The YTD or one year performance of a leveraged ETF can be wildly different than expected.

In the example below, ETF A and ETF B trade once a month. Both investments earn 12.7% over the year such that a $100 investment in either one would yield $112.70 at the end of the year. ETF A earns that performance with a consistent monthly return of 1% and a standard deviation of 0%. ETF B, on the other hand, has high volatility with a standard deviation of 21%. Both ETFs have leveraged versions of two and three times the base return. One would think that since the annual performance of the base ETFs are equal, so should the annual performance of their leveraged equivalents.

The total one year returns of the leveraged versions of ETF A are roughly as expected at 2.1X and 3.4X the actual return for the year. However, for the high volatility ETF B, we get some alarming results for the leveraged versions. The 2X version returns -28.2% and the 3X version returns as seemingly impossible -85.7%. A $100 investment in the unleveraged ETF will become $112 at year-end while the same $100 investment in the leveraged 3X ETF will leave the investor with a measly $14 at year end.

The long term returns of leveraged ETFs are inherently unpredictable and can be significantly impacted by volatility. Multiplying the long-term performance of the base ETF by 2 or 3 does not produce an accurate estimate of the performance of the 2X and 3X ETFs over that same time period. Invest in these ultra ETFs with caution and understanding of the impact that volatility plays on performance.

Don't miss a post! Receive new blogs by e-mail.